Hi, I'm Yash!

I'm a Ph.D. candidate in Electrical & Computer Engineering at Cornell, advised by Mohamed S. Abdelfattah, and a Student Researcher at Google Research.

I work on RL4E (teaching language models to make themselves more efficient) and universal regression.

Feel free to reach out if you'd like to chat AI, hardware-software co-design, or cool research ideas!

Research Directions

Universal Regression

We found that you can use language models to perform regression directly on unstructured text data, like system logs, to predict numerical outcomes. To give a sense of scale, the regressors can nearly perfectly capture the behavior of a cell of servers, sometimes as large as a building!

The cool part is that instead of just predicting a single number, the model learns to output the full probability distribution. This means it can capture complex or even multi-modal shapes in the data, reflecting the true range of possible outcomes.
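To make the multi-modal point concrete, here is a minimal sketch. It assumes the model emits its prediction as text and that we approximate the distribution by sampling several decodes; the sample strings, the bin width, and the helper names `histogram` and `local_modes` are all made up for illustration and are not taken from the actual system.

```python
from collections import Counter
import statistics

def histogram(values, bin_width):
    """Count how many values fall into each bin of the given width."""
    counts = Counter(int(v // bin_width) for v in values)
    return dict(sorted(counts.items()))

def local_modes(hist):
    """Return the bins whose count is strictly higher than both neighbouring bins."""
    return [b for b, c in hist.items()
            if c > hist.get(b - 1, 0) and c > hist.get(b + 1, 0)]

# Hypothetical decoded samples for one input (illustrative values only).
samples = ["10.2", "10.4", "9.8", "30.1", "29.7", "10.1", "30.3"]
values = [float(s) for s in samples]

hist = histogram(values, bin_width=5.0)
modes = local_modes(hist)
mean = statistics.mean(values)

print("histogram:", hist)
print("mode bins:", modes)
print("mean:", round(mean, 2))
```

In this toy data the samples cluster around two separate values, and the mean lands in between where no sample actually falls. That is the failure mode a single-number regressor hides and a full distribution exposes.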

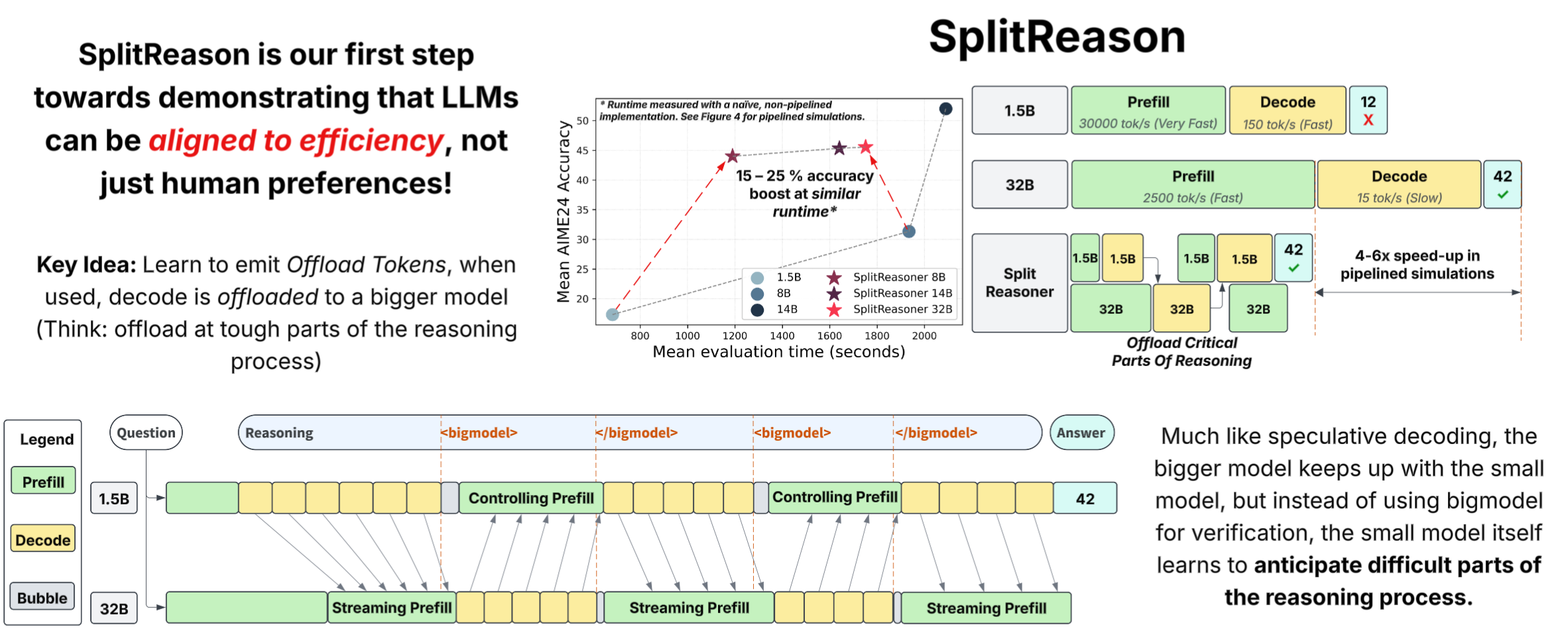

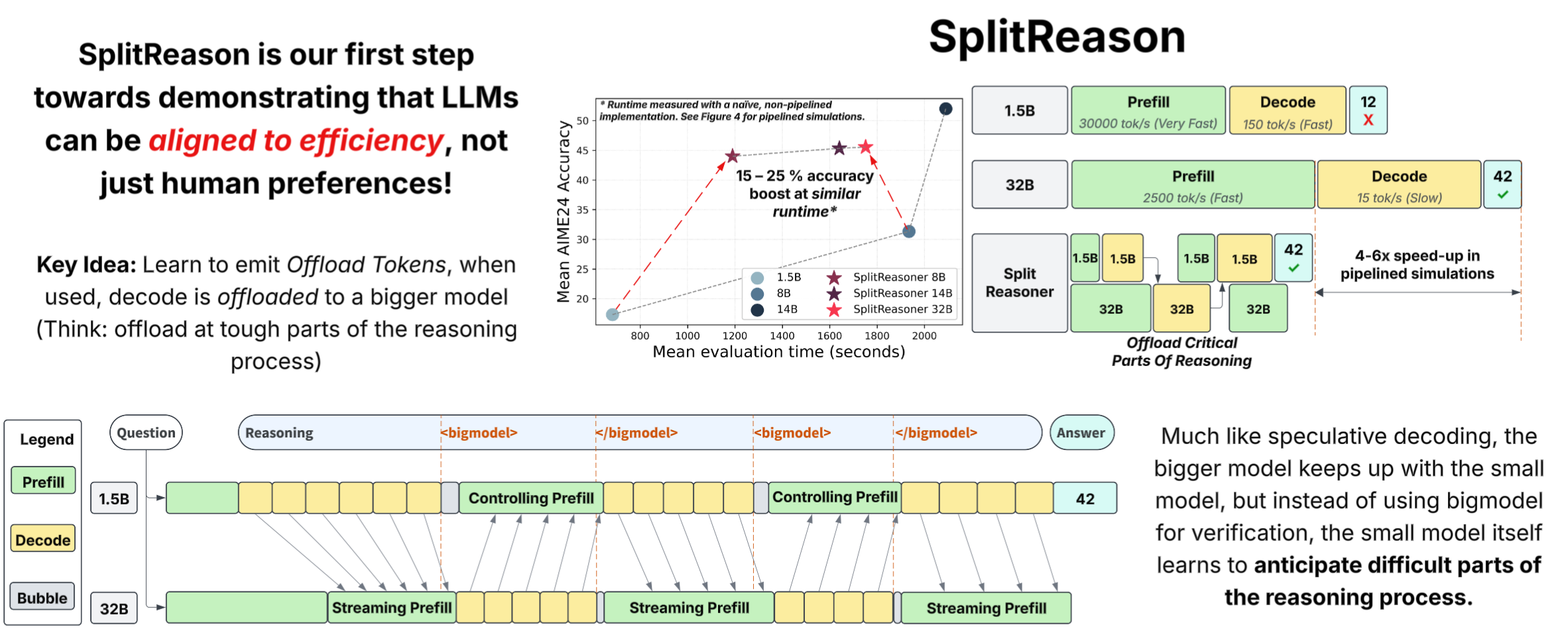

RL4E: Reinforcement Learning for Efficiency

We teach a small model when to 'call' a larger language model during decoding on hard reasoning tasks. This is just a demo, but the broader goal is to 'teach models' to make themselves more efficient by deciding when to use local attention, quantization, or sparsity based on what they are thinking about.

SplitReason uses a special token to call a larger model. In the same way, we can get creative and add more special tokens so that the model can control its own generation.
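The special-token hand-off can be sketched as a small decode loop. This is only an illustration under stated assumptions, not the SplitReason implementation: the token names `<offload>` and `</offload>`, the `generate` function, and the scripted toy models are all hypothetical, and the sketch assumes the larger model is the one that emits the closing token.

```python
from typing import Callable, List, Tuple

# Hypothetical control tokens; the real token names may differ.
OFFLOAD_START = "<offload>"
OFFLOAD_END = "</offload>"
EOS = "<eos>"

# A "model" here is just a function from the context so far to the next token.
NextToken = Callable[[List[str]], str]

def generate(small: NextToken, large: NextToken, prompt: List[str],
             max_tokens: int = 64) -> Tuple[List[str], List[str]]:
    """Decode with the small model, switching to the large one between control tokens."""
    tokens = list(prompt)
    owners = []          # which model produced each generated token
    use_large = False
    for _ in range(max_tokens):
        model = large if use_large else small
        tok = model(tokens)
        tokens.append(tok)
        owners.append("large" if use_large else "small")
        if tok == OFFLOAD_START:
            use_large = True      # small model asked for help
        elif tok == OFFLOAD_END:
            use_large = False     # hand control back to the small model
        elif tok == EOS:
            break
    return tokens[len(prompt):], owners

def scripted(script: List[str]) -> NextToken:
    """Toy stand-in for a model: replays a fixed list of tokens, ignoring context."""
    it = iter(script)
    return lambda context: next(it)

small = scripted(["easy", OFFLOAD_START, "done", EOS])
large = scripted(["hard", "step", OFFLOAD_END])
out, owners = generate(small, large, prompt=["question"])

print(out)
print(owners)
```

The same loop shape would extend to other control tokens: each one flips some piece of decode state (which model, which attention mode, which precision) that the model itself chose to change.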

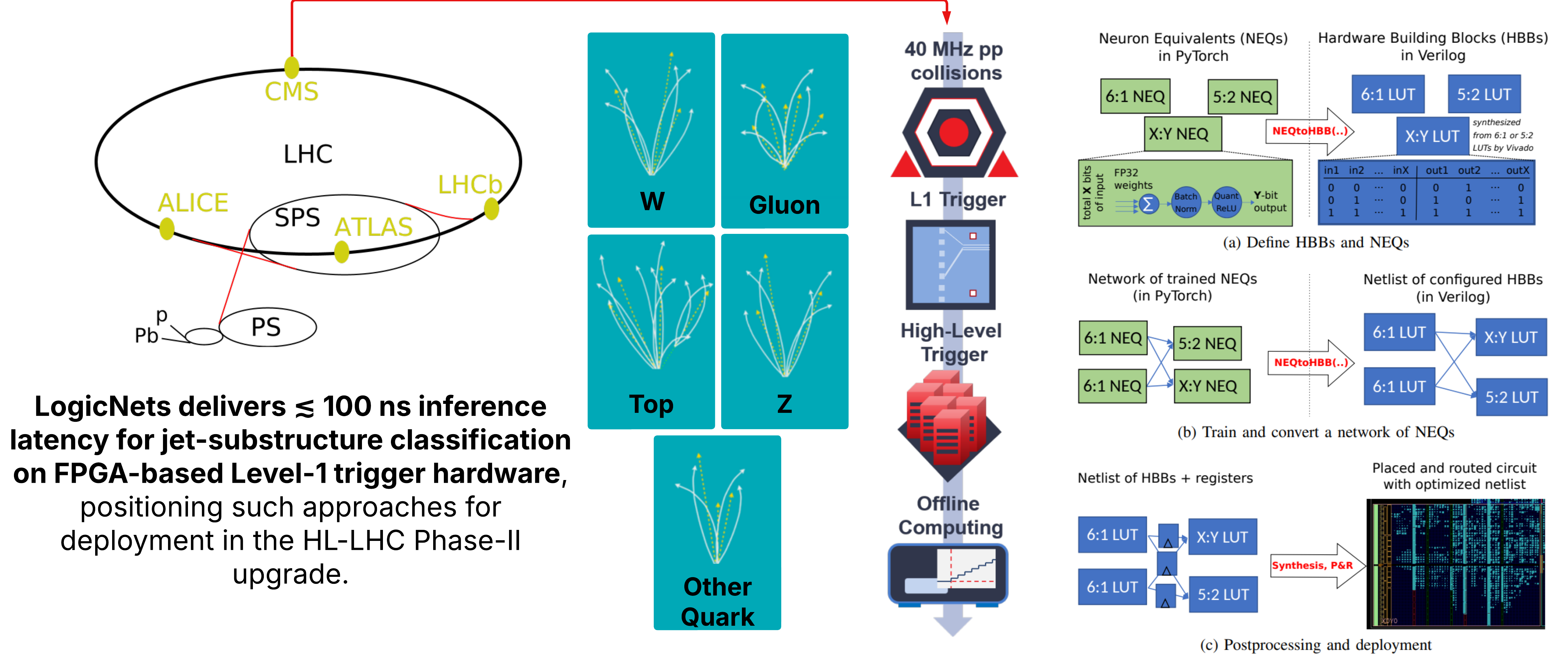

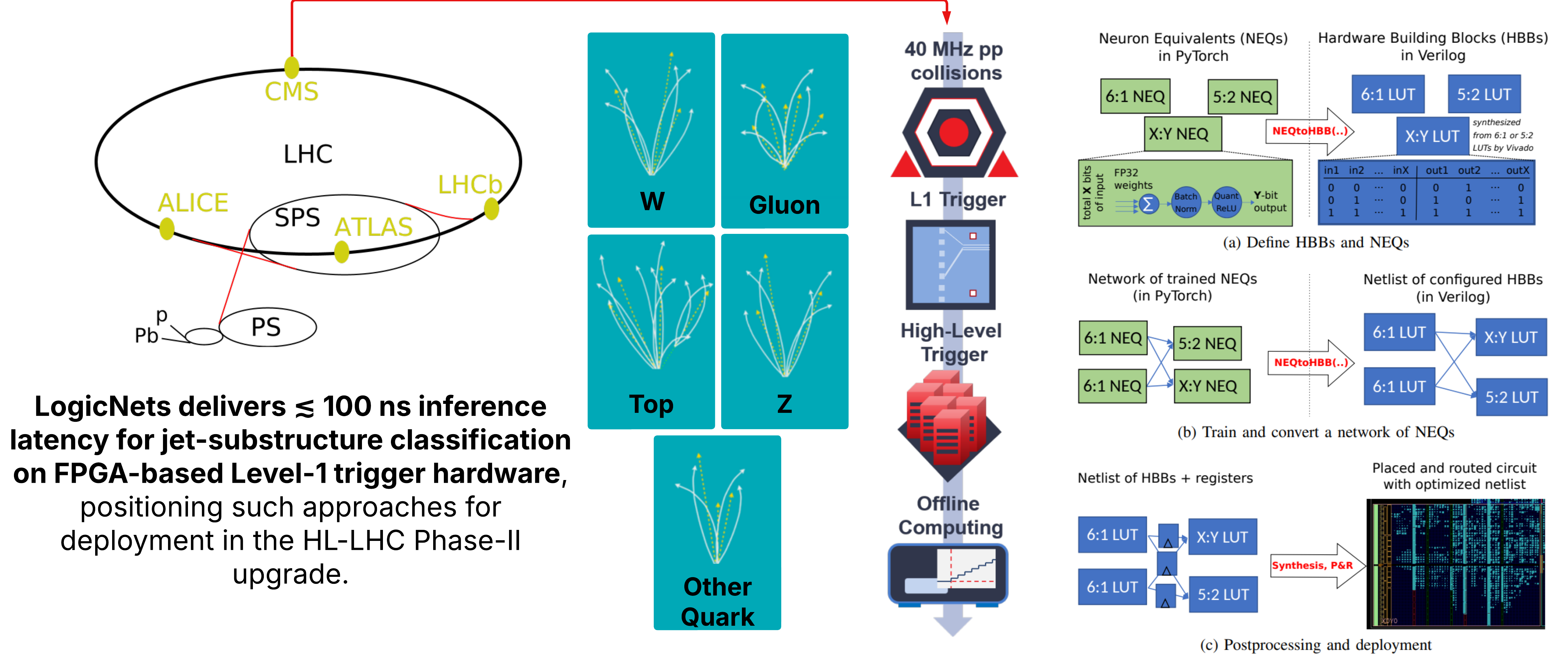

Mapping Neurons to Hardware

By using extreme sparsity and quantization, LogicNets turns each neuron into a tiny bundle of logic gates, porting an entire deep network into native FPGA fabric, with no CPUs and no firmware loops. Just pure, automatic neuron-to-hardware mapping.
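A rough way to see why sparsity and quantization make this possible: a neuron with only a few low-bit inputs has so few input combinations that its whole behaviour fits in a small truth table, which is what an FPGA lookup table stores. The sketch below is a simplified illustration, not the LogicNets toolflow; the 1-bit inputs, the threshold activation, the weights, and the names `neuron` and `to_truth_table` are assumptions chosen for the example.

```python
from itertools import product

def neuron(inputs, weights, bias):
    """Binary-activation neuron: 1 if the weighted sum is non-negative, otherwise 0."""
    total = sum(w * x for w, x in zip(weights, inputs)) + bias
    return 1 if total >= 0 else 0

def to_truth_table(weights, bias):
    """Enumerate every 1-bit input combination and record the neuron's output."""
    fan_in = len(weights)
    return {bits: neuron(bits, weights, bias)
            for bits in product((0, 1), repeat=fan_in)}

# Hypothetical sparse neuron: only three inputs survive pruning.
weights = [2, -1, 1]
bias = -2
table = to_truth_table(weights, bias)

for bits, out_bit in table.items():
    print(bits, "->", out_bit)
```

With a fan-in of 3 and 1-bit inputs the table has 2^3 = 8 entries. Once it is built, inference needs no arithmetic at all, just a lookup, and the table size grows exponentially with fan-in and bit width, which is why the sparsity and quantization have to be extreme.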

The whole jet-tagging model streams through in under 15 ns, sustaining hundreds of millions of inferences per second, so the ATLAS or CMS Level-1 trigger can make classifications in real time and still have headroom for everything else on the board.